WhatsApp, like many other messenger apps, allows users to send voice messages. Voice messages let people get a message across quickly instead of typing it out. The only problem is that the person receiving the message might not find it convenient to listen to, for example if they're in a meeting or in class.

That could change in a future update to the app. According to WABetaInfo, it appears that WhatsApp is working on a new feature for voice messages. This new feature introduces the ability to transcribe a voice message, meaning that it takes the audio recording and turns it into text.

In addition to being convenient, it's a great accessibility feature too. For people with hearing impairments, transcribing a voice message to text makes it possible to know what the message says. At the moment, it looks like only five languages are supported.

These are English, Spanish, Portuguese (Brazil), Russian, and Hindi. We expect WhatsApp will eventually introduce support for more languages, but for now, this is what we have. The feature isn't live yet and is only available to beta testers, so we might have to wait a bit before it goes live for everyone.

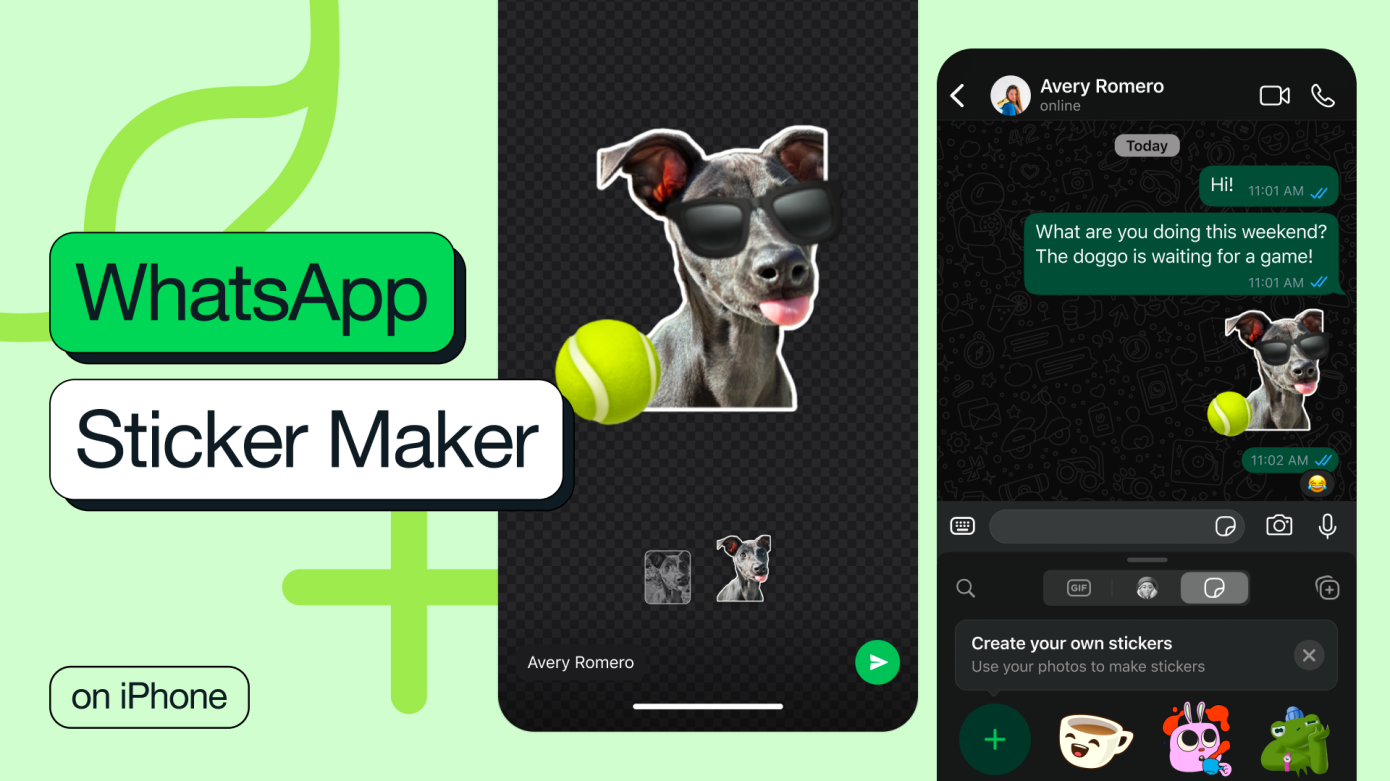

WhatsApp's parent company Meta is working on AI. Now it looks like WhatsApp is about to be on the receiving end of some of Meta's AI efforts. According to a recent report from WABetaInfo, WhatsApp could be getting AI image generation tools.

This feature comes in the form of something called "Imagine me". Basically, users will have to take photos of themselves, after which Meta AI will be able to generate AI images of them on the fly. It sounds pretty cool, especially if you're looking to make new stickers of yourself and want some cool photos to use for them.

How it works, at least in the current beta, is that users have to take some photos of themselves to show the AI. After that, they can launch Meta AI and type "Imagine me" in the conversation. It also appears to be an optional feature that users can opt into. This means that if you're uncomfortable with sharing your photos with Meta, you don't have to use this feature.

The Imagine me feature isn't live yet. It is unclear when WhatsApp will introduce this AI tool to the app, but we'll keep our eyes peeled for when it eventually goes live.

YouTube is cracking down hard on ad blockers. If you'd like to avoid the hassle, then subscribing to YouTube Premium is a good way of getting rid of ads legally. On top of that, there are a bunch of other perks that you get with the subscription. If that wasn't enough, YouTube has announced several new features for Premium subscribers.

One of those features is called "Jump ahead". You can always scrub through videos on YouTube, and there are also graphs that show you the most popular bits. But with this new feature, users can just tap a button to skip to the parts of a video that YouTube's AI thinks are the "best".

In addition to the jump ahead feature, YouTube has also announced that Premium subscribers can now enjoy YouTube Shorts in picture-in-picture mode. This lets you minimize the YouTube app on your phone without interrupting your Shorts video while you do something else.

There are also other new features for YouTube Premium including conversational AI. On paper this sounds pretty cool. Users can ask the AI questions about a video they're watching or ask it to recommend something. This could be useful if the YouTube algorithm or search isn't giving you what you want.

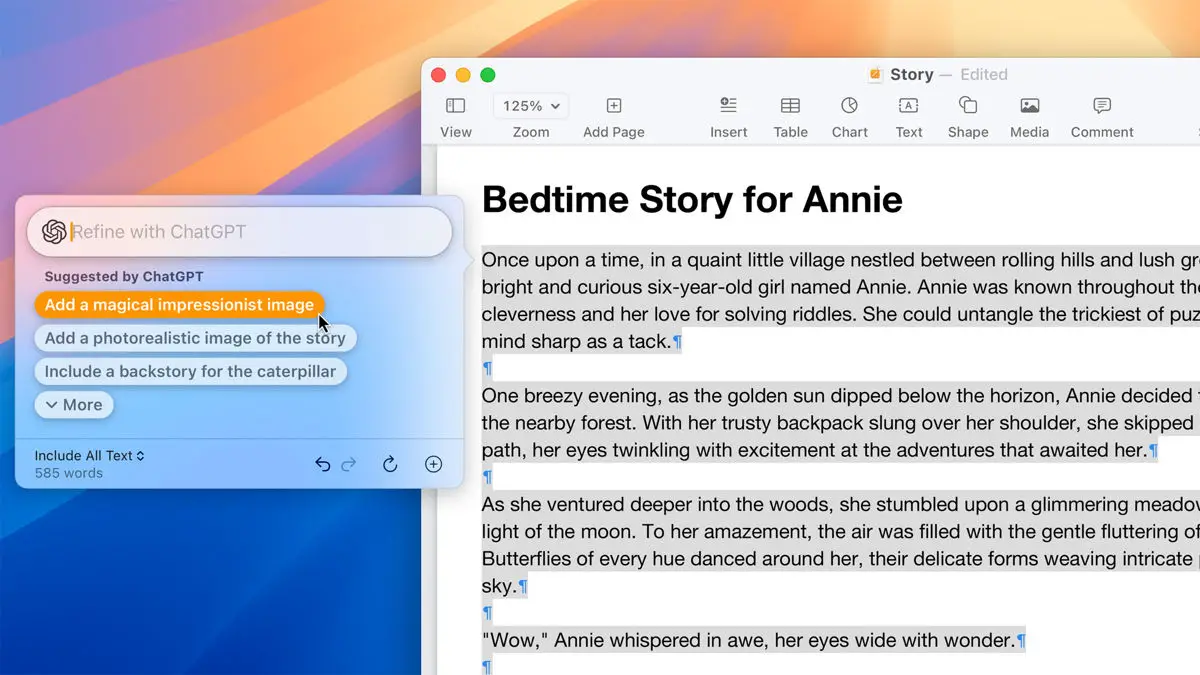

One of the biggest announcements Apple made at WWDC 2024 is Apple Intelligence. This is basically Apple's take on AI. The company also announced a partnership with OpenAI to use some of OpenAI's tech for some of its AI features. But it seems that Elon Musk isn't thrilled with Apple working with OpenAI.

In a post on X, Musk calls the partnership an "unacceptable security violation". He also says that he will ban the use of Apple devices at his companies. Musk goes on to say that visitors who bring Apple devices will have to check them at the door, where the devices will be placed in a Faraday cage.

For context, Musk was one of the co-founders of OpenAI alongside its current CEO, Sam Altman. However, Musk left the board back in 2018, and he and Altman have been at each other's throats ever since.

We doubt that Apple will end its partnership with OpenAI over Musk's posts, but it is an interesting reaction. That being said, Apple executive Craig Federighi did mention during an interview at WWDC 2024 that the company is open to the idea of using other AI models. He cited the possibility of letting users pick their own AI, such as Google Gemini.

With over 1 billion active users, Instagram has become one of the most powerful social media platforms for businesses to reach and engage with their target audience. However, simply having an account and posting content is not enough to guarantee success. To truly make the most out of this platform, you need to understand the secrets and strategies that can help drive your marketing efforts to new heights.

In this blog post, we will share 8 expert tips for navigating Instagram's hidden features and maximizing your marketing potential. From leveraging hashtags to utilizing the explore page, get ready to discover the insider knowledge that will take your Instagram game to the next level.

Get More Followers

Having a large and engaged follower base is crucial for the success of any business on Instagram. The more followers you have, the greater your potential reach and engagement will be. To increase your follower count, make sure to regularly post high-quality content that resonates with your target audience. You can also buy instant Instagram followers from reputable sources to give your account a boost. Additionally, engage with other users by liking and commenting on their posts, collaborate with influencers, and utilize hashtags effectively. By consistently implementing these strategies, you will see an increase in followers, leading to greater success on the platform.

Utilize Instagram Stories

Instagram Stories have become a popular feature for businesses to showcase their products or services in a more creative and engaging way. With over 500 million daily active users, this feature allows you to reach a wider audience and keep them engaged with your brand. Use features such as polls, quizzes, and countdowns to interact with your audience and build excitement around your brand.

You can also use Stories to highlight user-generated content and promote limited-time offers or promotions. By incorporating Instagram Stories into your marketing strategy, you can effectively capture the attention of potential customers and increase brand awareness.

Leverage User-Generated Content

User-generated content is one of the most powerful forms of social proof for businesses on Instagram. It not only showcases your product or service in a more authentic way but also helps build trust with potential customers. Encourage your followers to share their experiences with your brand and feature their content on your page.

This not only saves you time and effort in creating content but also creates a sense of community around your brand. Additionally, user-generated content is more likely to resonate with other users and potentially lead to an increase in followers and sales for your business.

Use Hashtags Effectively

Hashtags play a crucial role in helping your content reach a wider audience on Instagram. By using relevant and popular hashtags, you give your posts the potential to show up on the Explore page or appear in hashtag searches. Do some research to find out which hashtags are popular within your industry and use them strategically in your posts.

Furthermore, create branded hashtags to build a unique identity for your business and encourage users to use them in their posts. This not only increases your visibility but also helps you track user-generated content related to your brand. Remember to use a mix of popular and niche hashtags for optimal results.

Engage with Your Audience

Engaging with your audience is crucial for building relationships and fostering loyalty on Instagram. Respond to comments and messages, like and comment on their posts, and host interactive events such as Q&As or giveaways. This not only shows that you value your followers but also encourages them to continue engaging with your brand.

You can also use Instagram's "Guides" feature to curate posts related to a specific topic or product and share valuable information with your audience. By consistently engaging with your followers, you can create a loyal and dedicated community that will support and promote your brand on the platform.

Collaborate with Influencers

Partnering with influencers is an effective way to reach a larger audience and build credibility for your brand on Instagram. Find influencers within your niche and work with them to promote your products or services. This not only exposes your brand to their followers but also helps you tap into new audiences.

Make sure to choose influencers who align with your brand values and have an engaged following. You can collaborate with them for sponsored posts, takeovers, or giveaways. By leveraging the influence of others, you can boost your brand's visibility and potentially increase sales.

Utilize the Explore Page

The Explore page on Instagram is a powerful tool for businesses to reach new and potential customers. This feature curates content based on user behavior and interests, making it an excellent opportunity for your brand to get discovered by users who may not already follow you.

To increase your chances of appearing on the Explore page, make sure to use hashtags, post high-quality content, engage with other users, and utilize Instagram Stories. By consistently showing up on the Explore page, you can expand your reach and attract new followers and potential customers.

Analyze and Adjust Your Strategy Regularly

As with any marketing strategy, it's crucial to regularly analyze and adjust your efforts on Instagram. Use analytics tools such as Instagram Insights to track your performance and see what content resonates best with your audience.

Based on these insights, make necessary adjustments to your strategy, whether it's posting at different times or changing the type of content you share. This will help you stay relevant and keep up with the ever-changing algorithm of Instagram. By consistently analyzing and adjusting your strategy, you can ensure that your efforts on the platform are yielding the best results for your business.

Mastering the secrets and strategies of Instagram is crucial for businesses looking to achieve marketing success on this platform. By implementing these 8 expert tips, you can effectively navigate the hidden features and gain a competitive edge over other brands. Remember to consistently create high-quality content, engage with your audience, use hashtags and the Explore page, and regularly analyze and adjust your strategy. With these techniques, you can take your Instagram marketing game to new heights and reach a larger audience for your business.

© 2023 YouMobile Inc. All rights reserved